I’ve decided to start a small new series to share my knowledge of 3D engines & games. This first article is a general introduction to 3D engines. More specifically, it covers why and how we try to target 60 fps. I’ll also explain why consoles often target 30 fps and why VR targets 90+ fps. We’ll try to understand the physiological limits of the human eye when processing motion. Finally, we’ll see what VSync is and why variable refresh rate (or VRR) was the solution we were waiting for!

The goal of 3D engines is to create realistic real-time rendering of 3D environments, objects and characters. The idea is basically to imitate the “real world” as realistically as possible in terms of graphic quality and smoothness. To reach 60 fps, we use a lot of smart tricks to simulate materials, as well as lighting & shadows for instance, in order to use the available power as efficiently as possible.

But do you know why we often target 60 fps as the ultimate goal for video game enthusiasts?

Tesla, at the origin of 60 fps

We need to go back in history to the choice made by Tesla a long time ago (the genius engineer not the car manufacturer). What? Tesla is at the origin of 60fps in video games? Yes!

As explained in this article: Why Is the US Standard 60 Hz?, while researching how to generate and distribute electricity, Tesla “figured out that 60 Hz at 220 Vac was the most efficient”. Still, because of a trade-off made with Edison, the US ended up with 110V 60Hz AC. In Europe, we went for 220V 50Hz.

But why am I sharing that with you? Because this drove the choice of refresh rates for CRT-based TVs and monitors! This is perfectly explained in this article: What so special about the 60Hz refresh rate? :

“When electronic displays became common, with the dawn of commercial television, they were all CRT-based – and CRTs are very sensitive to external magnetic fields. To minimize possible problems from this source, the vertical frequency of CRT televisions was chosen to match that of the power lines: 60 Hz in North America*, and 50 Hz in Europe. (Due to bandwidth limitations in the broadcast TV system, interlaced scanning was used, so these were actually the field rates rather than the frame rates.) Later, as personal computer displays started to show up on the market in the late 1970s and early 1980s, these were also of course CRT-based and used TV-like timings for the same reasons. The original 60 Hz “VGA” timing standard (640 x 480 pixels at 60 Hz) was actually a progressive-scan version of the standard North American TV timing <…>

Today, of course, LCDs and other flat-panel technologies don’t have the same concerns as the CRT did, but the huge amount of existing 50/60 Hz video has caused these rates to be carried on in modern TV and computer timing standards.”

As explained, due to bandwidth limitations in broadcasting, we weren’t watching 50 or 60 full frames per second on our CRT TVs but half of that (interlacing draws only 1 line out of 2 on each pass), which results in 25 fps in Europe and 30 fps in the US. You now know why videos are displayed at 30 fps in the US and 25 fps in Europe. I won’t explain why movies / cinemas use 24 fps; you can easily find the historical reason with your favorite search engine. Spoiler: it was purely for economic considerations; it’s unfortunately not linked to our physiological capacities.

Today, most LCD panels in our laptops or TVs have a refresh rate of 60 Hz. That’s why we try to generate 60 frames per second, to be perfectly aligned with this refresh rate.

By the way, please note that refresh rate and frame rate are different things. The refresh rate, as just explained, is the number of times per second your monitor / TV updates the screen. Generally, it refreshes by drawing line by line from the top of the screen down to the bottom. The frame rate is the number of new frames your computer generates every second. Obviously, those 2 rates won’t always be synchronized. The graphics card can generate fewer frames per second than your monitor can refresh, and vice versa. We will see later the various strategies to manage that when explaining what V-Sync is.

But before that, let’s briefly try to understand the hard job of a game developer.

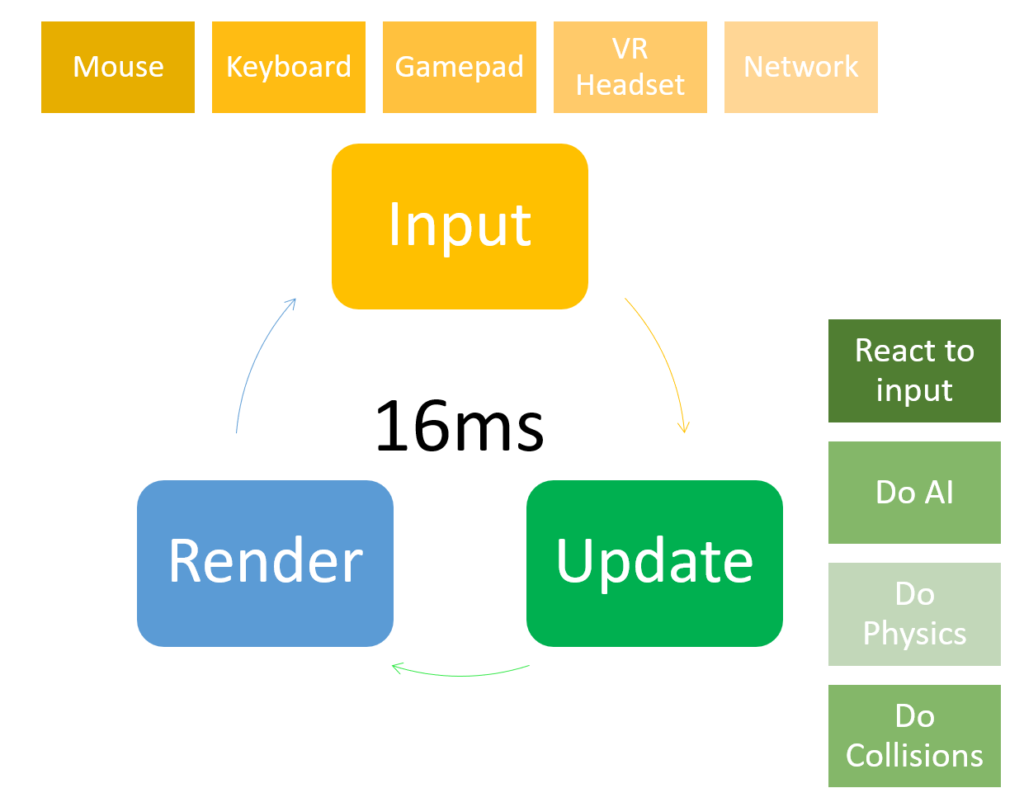

16ms to do all your tasks

Real-time means you have less than 16ms to compute a new frame if you’d like to target 60fps on PC, or 33ms for 30fps on console. Targeting VR? You now have only 11ms for the 90fps target. We will see the reasons for those specific numbers below. But trust me, this is a very constrained world. It’s super complex to achieve. Indeed, as a game developer, inside the game loop, you also have to manage the AI of enemies, the physics engine, the collision engine, sound effects, inputs, loading resources from your hard drive or SSD, the network and so on. If one of those components takes too much time and raises the render loop time just a couple of ms above your target, you’ll miss the 16ms budget, the framerate will drop below 60 fps and the experience will suffer. And here comes the drama on social networks. 😉
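The budgets quoted above are simply 1000 ms divided by the target frame rate. Here is a minimal sketch of that arithmetic in TypeScript. The task names and their costs are invented for illustration, and the helper names (`frameBudgetMs`, `fitsBudget`) are hypothetical, not part of any engine.

```typescript
// Time budget, in milliseconds, to produce one frame at a given target frame rate.
function frameBudgetMs(targetFps: number): number {
  if (targetFps <= 0) {
    throw new RangeError("targetFps must be positive");
  }
  return 1000 / targetFps;
}

// One entry per task run inside the game loop.
interface TaskCost {
  name: string;
  ms: number;
}

// Hypothetical costs for a single iteration of the game loop (made-up numbers).
const hypotheticalFrame: TaskCost[] = [
  { name: "inputs", ms: 0.5 },
  { name: "AI", ms: 3 },
  { name: "physics and collisions", ms: 4 },
  { name: "sound", ms: 1 },
  { name: "rendering", ms: 9 },
];

// Sum of all task costs for one iteration of the game loop.
function totalFrameTimeMs(tasks: TaskCost[]): number {
  return tasks.reduce((sum, task) => sum + task.ms, 0);
}

// True when the whole iteration fits inside the budget of the target frame rate.
function fitsBudget(tasks: TaskCost[], targetFps: number): boolean {
  return totalFrameTimeMs(tasks) <= frameBudgetMs(targetFps);
}

console.log(frameBudgetMs(60).toFixed(2)); // "16.67"
console.log(frameBudgetMs(30).toFixed(2)); // "33.33"
console.log(frameBudgetMs(90).toFixed(2)); // "11.11"
console.log(fitsBudget(hypotheticalFrame, 60)); // false: 17.5 ms is over the 60 fps budget
console.log(fitsBudget(hypotheticalFrame, 30)); // true
```

In this made-up frame, the loop is less than 1 ms over the 60 fps budget, which is enough to miss the target.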

A 3D engine is then all about clever optimizations to approximate photorealistic rendering with the fewest resources possible. It’s a battle, or a game for some, against the machine to use its power in the most efficient way. We can also consider that 3D engines are cheating. They try to make you think it’s real by sometimes using very smart optical tricks. We’ll see that in the next article.

The two main components used are the CPU (or we could even say multiple CPUs, as all CPUs are multicore today) and the GPU, which embeds hundreds (if not thousands) of dedicated cores. Obviously, the GPU will do most of the 3D rendering work, but the CPU and the data sent between the CPU and GPU are also really important parts of the performance. The complete architecture has a huge impact on the overall performance and quality of the rendering: the quantity of memory and its bandwidth, the various buses between components, dedicated hardware, the type and speed of the drive (SSD or not?). Today, we often talk about TeraFLOPS or TFLOPS to evaluate the power of a machine. It’s indeed an interesting indicator of the performance of a system, but we can’t fully rely on it. 2 systems with similar TFLOPS can still show significant performance differences based on their architecture. Still, if one of them has a truly higher TFLOPS figure, it will have faster or better rendering for sure.

Going back to the CPU. If it takes too much time to do some of its tasks (AI, physics or loading data from the hard drive for instance), the GPU will have to wait and won’t be able to reach the ideal FPS. You will be in a CPU-bound scenario. The GPU won’t be used at its maximum capacity. Indeed, never forget that 16ms/60fps is the time allocated for the complete machine to deliver its tasks: CPU, GPU, memory, I/O, inputs. And to be honest, the bottleneck is often on the CPU side. That’s why a very big GPU could be useless if the underlying CPU or architecture is not serving it properly.

Conversely, there could be cases where the 3D scene is too complex to be rendered in time: models whose geometry is too detailed (too many polygons), shaders that are too complex, etc. Then the GPU will struggle and will need more than the 16/33ms budget to render a frame. You’ll be GPU-bound.

Writing a performant 3D game is a true art of balancing all those constraints. As you could expect, the right balance is easier to find on a fixed hardware configuration (such as a console) than on PC, with its rich and heterogeneous diversity of components. That’s why you have more options on PC to tune this balance yourself, in order to push the cursor toward graphics quality or performance based on your preferences.

But what if the game can’t reach the target frame rate (30 or 60 fps)? Using profilers, you will first have to find where the bottleneck is and make a choice. If it’s the CPU, maybe there are too many objects to update on screen, so let’s lower this number. If it’s the GPU, maybe the 3D models are too detailed, so let’s simplify them. We even have super-efficient tools now, such as Simplygon, to help us do this task. You’d like to keep the current rendering quality for artistic reasons but can’t reach 60 fps? Target 30 fps if it doesn’t hurt the gameplay. The jobs of the 3D artist and the level designer are also super critical. They have to understand the architecture of a gaming machine to build their assets in the most optimized way. It’s really about balancing a complex equation.

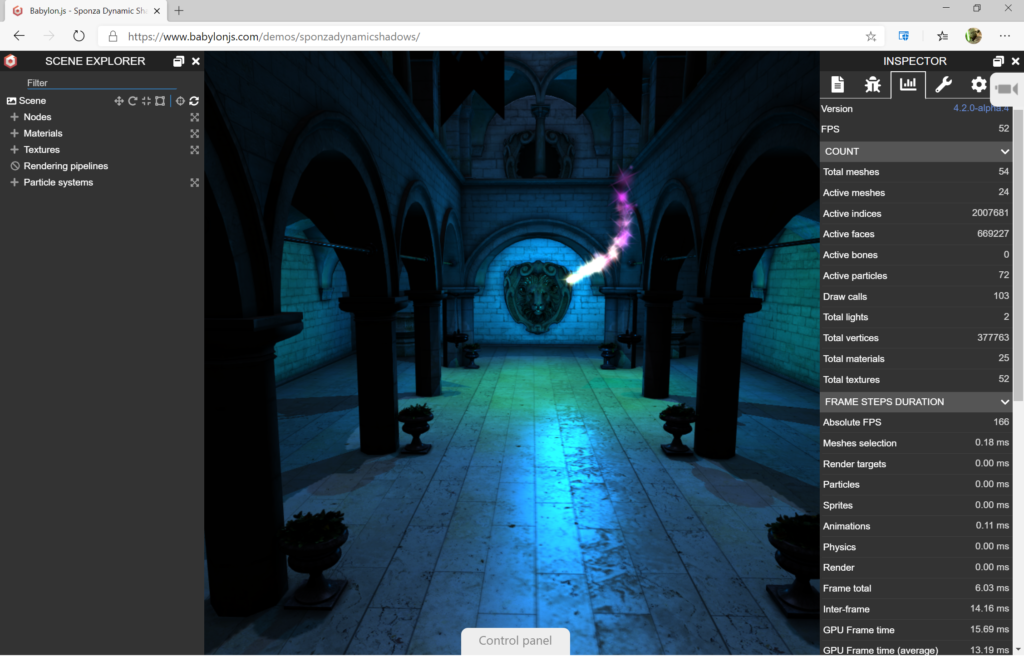

To illustrate that, let’s play with a real interactive demo in your browser. We’re going to play with Babylon.js, an open-source WebGL engine built and used by Microsoft for the web. Open the following Babylon.js demo: https://www.babylonjs.com/demos/sponzadynamicshadows/ and click on “Control panel” and “Debug”:

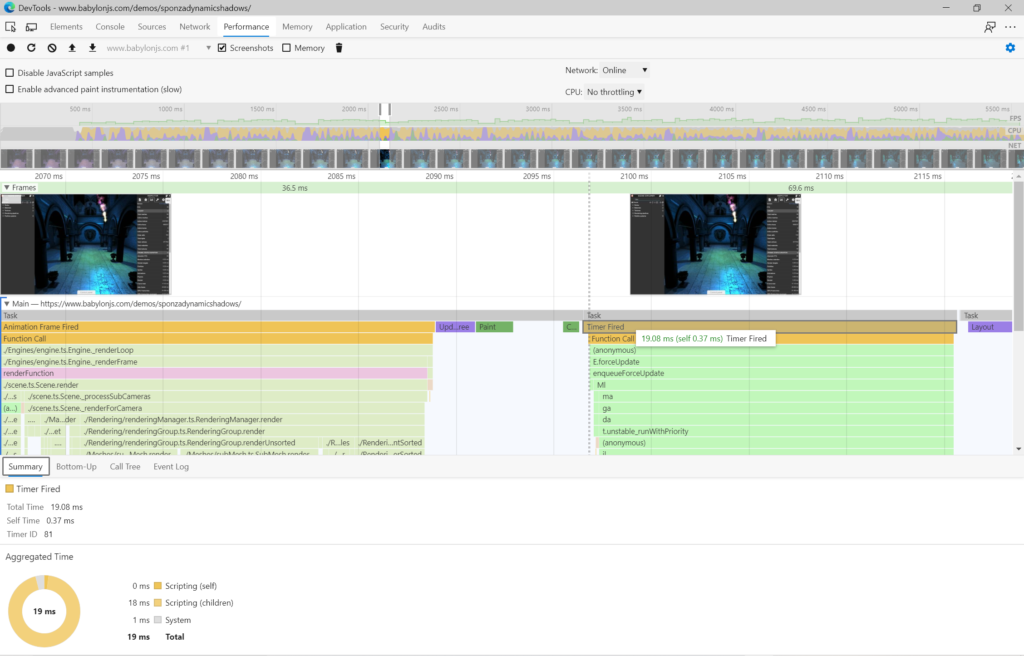

This will display our inspector pane on the right. Then, search for the stats tab. Our engine is showing you what takes the most time for each rendering pass. This specific demo doesn’t run at 60 fps on the machine where I’m writing this article. It’s between 50 and 60 fps. Still, as you can see in the screenshot, the “Frame total” time is way below 16ms, around 6ms. This means the GPU is not the bottleneck. If the CPU were strong enough and the screen allowed it, the GPU could generate up to 166 fps for this specific scene (1000 ms / 6 ms per frame). Using the Edge / Chrome developer tools (pressing F12 on a PC), you can dig further into the cause of this thanks to the Performance tab:

In this screenshot, we see that the game loop (managed by the requestAnimationFrame API in JavaScript) took 19 ms to fully execute, which translates to 52 fps. As the GPU only needs 6 ms to render the frame, we’re under the 60 fps threshold because of the CPU. We’re CPU-bound. You’ll find plenty of other demos to play with on our playground: https://playground.babylonjs.com/ if you’re interested in hacking more around this topic.
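The reasoning applied to this demo can be written down as a small sketch. It assumes the two numbers read from the screenshots (19 ms for the game loop, 6 ms for the GPU “Frame total”) can be compared directly, which is a simplification. The function and type names are hypothetical and are not part of the Babylon.js API.

```typescript
type Bottleneck = "CPU-bound" | "GPU-bound" | "within budget";

// Frame rate that a given per-frame time (in ms) would allow on its own.
function fpsFromFrameTime(frameTimeMs: number): number {
  return 1000 / frameTimeMs;
}

// Simplified classification: compare the CPU-side game loop time and the
// GPU frame time against the budget of the target frame rate.
function classifyBottleneck(
  cpuLoopMs: number,
  gpuFrameMs: number,
  targetFps: number
): Bottleneck {
  const budgetMs = 1000 / targetFps;
  if (cpuLoopMs <= budgetMs && gpuFrameMs <= budgetMs) {
    return "within budget";
  }
  // Whichever side takes longer is the one holding the frame rate back.
  return cpuLoopMs >= gpuFrameMs ? "CPU-bound" : "GPU-bound";
}

// Numbers read from the screenshots discussed above.
const gameLoopMs = 19;
const gpuFrameTotalMs = 6;

console.log(Math.floor(fpsFromFrameTime(gameLoopMs))); // 52
console.log(Math.floor(fpsFromFrameTime(gpuFrameTotalMs))); // 166
console.log(classifyBottleneck(gameLoopMs, gpuFrameTotalMs, 60)); // "CPU-bound"
```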

What’s the limit of the human eye and why 60 fps is better than 30?

We need to solve a common question people have around frame rate. Can we really see and feel the difference between 30 and 60 fps or even more? What is the physiological limit of the human eyes and our brain?

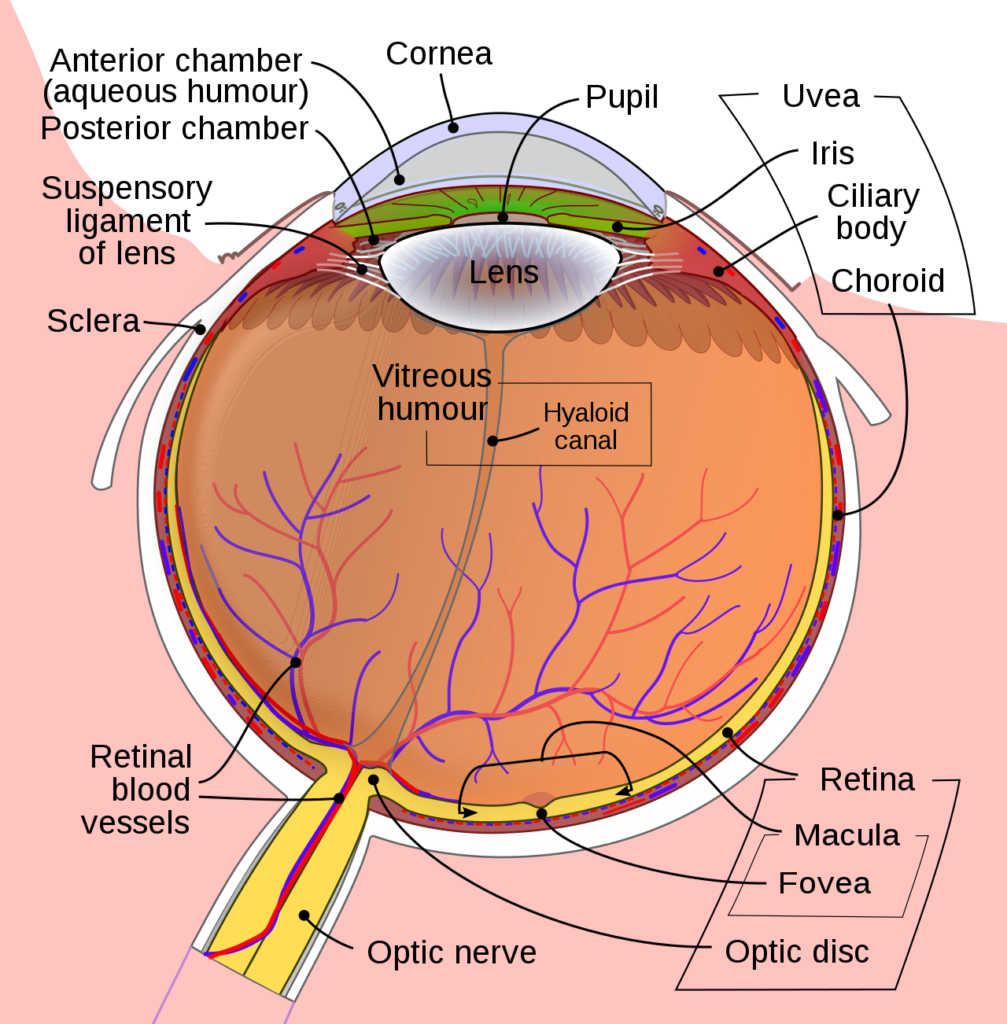

By far the best article I’ve found dealing with this question is: Why movies look weird at 48fps, and games are better at 60fps, and the uncanny valley…. You should really read this article to better understand how our eyes work. From my point of view, the key part is: “Ocular microtremor is a phenomenon where the muscles in your eye gently vibrate a tiny amount at roughly 83.68Hz (on average, for most people). (Dominant Frequency Content of Ocular Microtremor From Normal Subjects, 1999, Bolger, Bojanic, Sheahan, Coakley & Malone, Vision Research). It actually ranges from 70-103Hz.”. It then explains why the minimum target is around 41 fps, about half of that frequency, to have the best results when extracting spatial information.

This awesome article also explains the important choice between higher resolution and better frame rate: “Higher resolution vs. frame rate is always going to be a tradeoff. That said, if you can do >~38-43 fps, with good simulated grain, temporal antialiasing or jitter, you’re going to get better results than without. Otherwise jaggies are going to be even more visible, because they’re always the same and in the same place for over half of the ocular microtremor period. You’ll be seeing the pixel grid more than its contents. The eye can’t temporally alias across this gap – because the image doesn’t change frequently enough.

Sure, you can change things up – add a simple noise/film grain at lower frame rates to mask the jaggies – but you may get better results in some circumstances at > 43fps with 720p than at 30fps with 1080p with jittering or temporal antialiasing – although past a certain point, the extra resolution should paper over the cracks a bit. At least, that is, as long as you’re dealing with scenes with a lot of motion – if you’re showing mostly static scenes, have a fixed camera, or are in 2D? Use more pixels.”

Thanks to the research done in this article, we have factual and scientific explanations of why 60 fps is better than 30.

There are plenty of other articles that I’ve found with fewer factual references. This one is a good example: What is the highest frame rate (fps) that can be recognized by human perception? At what rate do we essentially stop noticing the difference?. I often read that you can see a 1000Hz frequency flash in a dark room, which is totally true. But does this mean you can process a moving object at 1000fps? Based on the previous article, I don’t think so. There seems to be a huge difference between stimulating your eye with super-fast images and your capacity to process and extract spatial information out of them.

This is confirmed by this other great article I would recommend reading: How many frames per second can the human eye really see? And here are the main parts:

“The first thing to understand is that we perceive different aspects of vision differently. Detecting motion is not the same as detecting light. Another thing is that different parts of the eye perform differently. The centre of your vision is good at different stuff than the periphery. And another thing is that there are natural, physical limits to what we can perceive. It takes time for the light that passes through your cornea to become information on which your brain can act, and our brains can only process that information at a certain speed”

By the way, it partly explains why we render at 90 fps in VR: “But out in the periphery of our eyes we detect motion incredibly well. With a screen filling their peripheral vision that’s updating at 60 Hz or more, many people will report that they have the strong feeling that they’re physically moving. That’s partly why VR headsets, which can operate in the peripheral vision, update so fast (90 Hz).”. It’s also because some studies show that 90 fps reduces motion sickness in VR.

The conclusion is interesting:

- Some people can perceive the flicker in a 50 or 60 Hz light source. Higher refresh rates reduce perceptible flicker.

- We detect motion better at the periphery of our vision.

- The way we perceive the flash of an image is different than how we perceive constant motion.

- Gamers are more likely to have some of the most sensitive, trained eyes when it comes to perceiving changes in imagery.

- Just because we can perceive the difference between framerates doesn’t necessarily mean that perception impacts our reaction time.

Combining those 2 articles would indicate that going higher than 120 fps would be useless for most people but there are still passionate debates on this. But for sure, we know you can feel the difference between 30 and 60 fps.

This also explains why the new Variable Rate Shading (VRS) is really interesting. The center of our vision is very sensitive to detail, whereas the periphery is less sensitive to detail but more sensitive to motion. Let’s benefit from that by lowering the quality of the rendering at the periphery (the edges of the image) in order to boost the FPS and better satisfy our motion perception.

The new Xbox Series X will support this technology.

Additional resources on this topic from Wikipedia:

- Frame rate: “The temporal sensitivity and resolution of human vision varies depending on the type and characteristics of visual stimulus, and it differs between individuals. The human visual system can process 10 to 12 images per second and perceive them individually, while higher rates are perceived as motion.[1] Modulated light (such as a computer display) is perceived as stable by the majority of participants in studies when the rate is higher than 50 Hz. This perception of modulated light as steady is known as the flicker fusion threshold. However, when the modulated light is non-uniform and contains an image, the flicker fusion threshold can be much higher, in the hundreds of hertz.[2] With regard to image recognition, people have been found to recognize a specific image in an unbroken series of different images, each of which lasts as little as 13 milliseconds.[3] Persistence of vision sometimes accounts for very short single-millisecond visual stimulus having a perceived duration of between 100 ms and 400 ms. Multiple stimuli that are very short are sometimes perceived as a single stimulus, such as a 10 ms green flash of light immediately followed by a 10 ms red flash of light perceived as a single yellow flash of light.[4]”

- Motion perception

So, we’ve seen how sensitive we are to FPS as human beings, but what about the way the hardware and rendering work?

Strategies to adapt the frame rate to the refresh rate

As explained before, the frame rate is the raw capacity of your machine/GPU to generate a certain number of frames per second (FPS), whereas the refresh rate is the speed at which the hardware (the monitor) clears and draws a new image on screen. The refresh rate is expressed in Hz. The refresh rate of a monitor is fixed. Most of the time, it will be 60 Hz, which means that whatever you feed it, it will update itself every 1/60 s ≈ 16.67 ms. The FPS can of course vary depending on the current load of the GPU or on whether the CPU is struggling with the game logic. In conclusion, most of the time, you have to fit a variable frame rate inside a fixed refresh rate. So how do we deal with that?

V-Sync on or off? Stuttering or tearing?

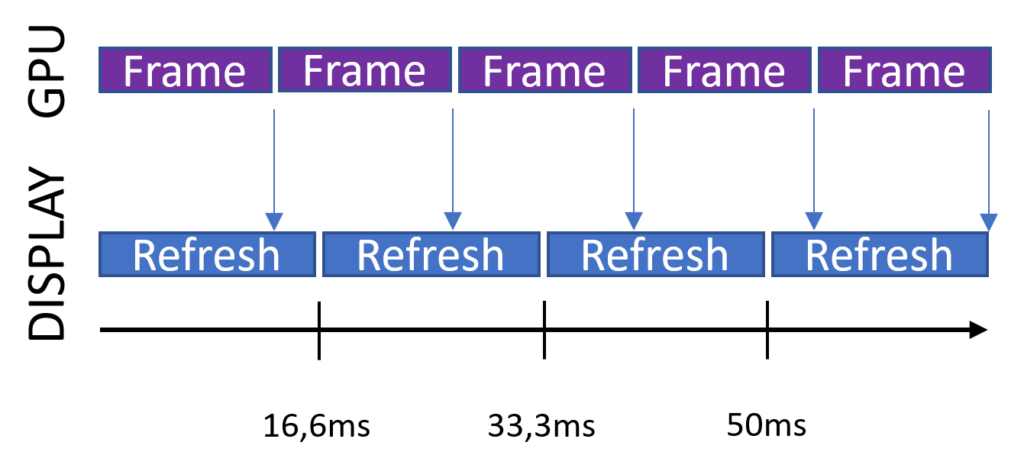

What’s V-Sync? V-Sync stands for vertical synchronization, or synchronizing with the vertical refresh rate of the monitor. As explained before, almost all monitors run at a fixed refresh rate of 60 Hz. By default, your monitor will ask your GPU to provide a new frame every 16.6ms. Then, it will draw it on screen line by line from top to bottom.

But then you have 2 choices: either you’re synchronizing the frame rate to the vertical refresh rate, or you simply don’t synchronize with the refresh rate and send the frames as-is.

Let’s first review the non-synchronized approach (Vsync off).

V-Sync off

If you’re generating more frames per second than the refresh rate of your monitor, a new frame will be served to the monitor before it has finished drawing the previous one. Remember, the monitor is drawing on the screen line by line from top to bottom. This means the monitor will draw the beginning of one image from the top and will continue with a new image partway down. It could be for instance 40% of a frame followed by 60% of the next frame. This will generate a tearing effect in the image on screen:

Of course, you will have the same phenomenon if you’re generating fewer frames per second than the refresh rate with V-Sync off. If the frame takes more than 16ms to be rendered, the monitor will start to redraw the same frame and will receive a new one a couple of ms later, partway through the redraw. So, it will continue by drawing this new frame over the rest of the screen, which will result in a tearing effect again. This tearing effect can be almost invisible during motion if it appears at the top or bottom of the screen. But if it occurs in the middle of the image, you’ll probably see it and it’s not great.

However, the benefit of this approach is that you’ll have the best response time to your inputs.
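To make the position of the tear more concrete, here is a minimal sketch under a simplifying assumption: the monitor sweeps the rows at constant speed over the whole refresh interval, and vertical blanking is ignored. The function name `tearRow` and the 1080-row screen are illustrative choices.

```typescript
// Row at which the tear appears when a new frame arrives while the monitor
// is in the middle of a scan-out. Rows above it come from the old frame,
// rows below it come from the new frame.
function tearRow(swapTimeMs: number, refreshHz: number, screenRows: number): number {
  const refreshIntervalMs = 1000 / refreshHz;
  // How far into the current scan-out the new frame arrived.
  const offsetInScanMs = swapTimeMs % refreshIntervalMs;
  return Math.round((offsetInScanMs / refreshIntervalMs) * screenRows);
}

const refreshHz = 60;
const screenRows = 1080;
const refreshIntervalMs = 1000 / refreshHz;

// The new frame arrives 40% of the way through the scan-out, as in the
// "40% of a frame followed by 60% of the next frame" example.
const row = tearRow(0.4 * refreshIntervalMs, refreshHz, screenRows);
console.log(`tear near row ${row} of ${screenRows}`);
```

A tear close to row 0 or to the last row sits at the edge of the screen, which is why it tends to go unnoticed there.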

Let’s review V-Sync on now.

V-Sync on

There will be 2 cases again. Let’s start with the best one: your GPU is faster than the refresh rate.

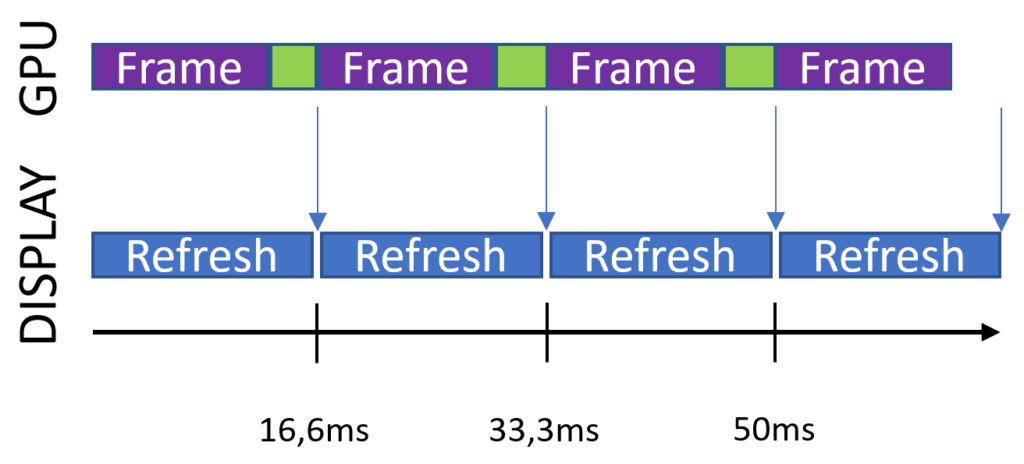

As you can see with the green boxes, the GPU will wait for the monitor signal before sending a new frame. This will completely remove the tearing, as the image buffer will be synchronized with the refresh rate. The GPU will be on hold, waiting for the monitor to finish its refresh job. It will then be under-utilized.

Oh, by the way, if you’d like to understand the frame buffer concept, the 2 best articles I’ve found are:

In a nutshell, the graphics card generates a new frame and stores it inside a buffer: the frame buffer. For a long time now, we’ve commonly used a double buffering approach. While one buffer is read and sent to the monitor to be displayed, a second buffer is used to compute the next frame. Once the first buffer has been sent to the monitor, the second buffer becomes the primary buffer read by the monitor, and the first one becomes the back buffer for the next frame. You can even use triple buffering to absorb potential GPU load peaks, but the trade-off is higher input latency. We don’t go further than triple buffering, as the delay between what you’re doing with your gamepad / mouse and the frame being displayed would be too high (input lag).
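The buffer swap described above can be sketched in a few lines. This is only an illustration of the idea: a frame is reduced to a text label instead of pixel data, and the class name `DoubleBufferedSwapChain` and its methods are hypothetical, not a real graphics API.

```typescript
// A frame is reduced to a label here; a real buffer would hold pixel data.
interface FrameBuffer {
  content: string;
}

class DoubleBufferedSwapChain {
  private front: FrameBuffer = { content: "(empty)" };
  private back: FrameBuffer = { content: "(empty)" };

  // The GPU draws the next frame into the back buffer only.
  render(content: string): void {
    this.back.content = content;
  }

  // What the monitor reads during its refresh: always the front buffer.
  displayed(): string {
    return this.front.content;
  }

  // Exchange the roles: the finished frame becomes visible and the old
  // front buffer becomes the draw target for the next frame.
  swap(): void {
    const previousFront = this.front;
    this.front = this.back;
    this.back = previousFront;
  }
}

const chain = new DoubleBufferedSwapChain();
chain.render("frame 1");
console.log(chain.displayed()); // "(empty)": frame 1 is not visible before the swap
chain.swap();
chain.render("frame 2");
console.log(chain.displayed()); // "frame 1": frame 2 is being drawn off-screen
```

Triple buffering would add a third buffer to this rotation, at the cost of showing frames that are older relative to the player’s inputs.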

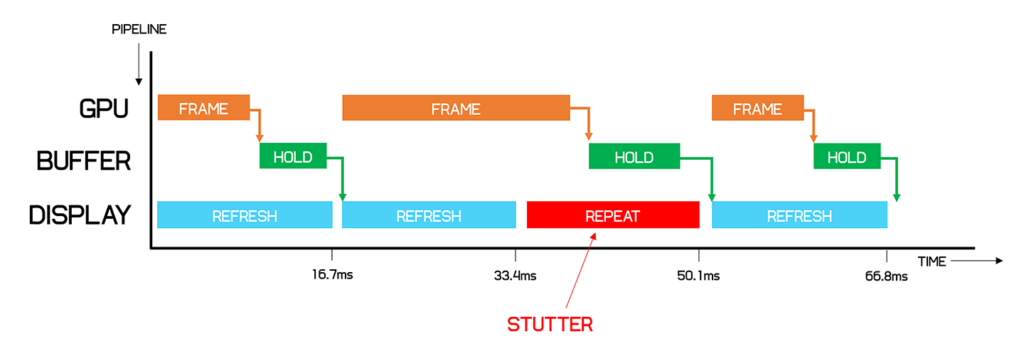

The Techspot article has a full, excellent explanation of the various strategies, and this graph perfectly explains what happens with V-Sync on when the framerate is below 60 fps:

This diagram is super interesting. If you’re missing the 16.6ms target, the monitor HAS to do something anyway while the GPU finishes computing the next frame. Then, the simplest idea is to redraw the previous frame from the frame buffer. But this will generate some stutter.

Wait.

This is really important to understand. If you can’t maintain a solid 60 fps rendering, the same frame will be displayed twice. This means that you will show the same frame for 33ms. This means that you will render at 30 fps! To be exact, you will alternate between 30 fps and 60 fps rendering from frame to frame, which is far from being a nice experience. Double or triple buffering can absorb small temporary drops in frame rate, but if the average framerate is below 60 fps, you will still end up with some stuttering.

This is why consoles usually target 30 fps rather than 60 fps. The cost of going from 30 to 60 fps is super high, and there is no point in being in between. Let’s say that your GPU can render your game at 45 fps: on a monitor with a fixed 60 Hz refresh rate, it will be displayed at 30 fps because of the above explanation. You can’t be in between (well, except on some monitors that can be set to run at 45 Hz). On a console, as you’ve got fixed hardware, if you’re running at 45 fps, it’s a better idea to spend this additional 15 fps budget on something else: more advanced graphic rendering, more detailed objects, better AI and so on. To run a game at 60 fps, you need rendering that stays very stably above 60 fps. A single frame that takes longer than 16ms and you’ll have a drop to 30 fps.

Still, 30 fps is already a good enough experience for the human eye. We’ve seen before it’s better to have around 40fps to start to have something really interesting for our motion perception but having a stable 30 fps is way better than having some stuttering trying to reach 60 fps.
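The drop from 45 fps to 30 fps discussed above follows from a simple rule: with V-Sync on, each frame stays on screen for a whole number of refresh cycles. Here is a minimal sketch of that rule, assuming classic double buffering and a GPU that needs the same time for every frame (real frame times vary, which is what produces the alternation between 30 and 60 fps). The function name is hypothetical.

```typescript
// Frame rate actually shown on a fixed-refresh monitor with V-Sync on.
function displayedFpsWithVSync(gpuFps: number, refreshHz: number): number {
  // Number of refresh cycles each frame stays on screen (a whole number,
  // rounded up because a late frame has to wait for the next refresh).
  const refreshesPerFrame = Math.ceil(refreshHz / gpuFps);
  return refreshHz / refreshesPerFrame;
}

console.log(displayedFpsWithVSync(45, 60)); // 30
console.log(displayedFpsWithVSync(59, 60)); // 30
console.log(displayedFpsWithVSync(60, 60)); // 60
console.log(displayedFpsWithVSync(120, 60)); // 60
```

Under these assumptions, a GPU running at 59 fps is displayed exactly like one running at 31 fps, which is why the extra power is better spent elsewhere on fixed hardware.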

The solution: Variable Refresh Rate

Up to now, the GPU was forced to align itself with the refresh rate of the monitor, which generates the many complex issues we’ve just seen. What if we inverted the roles? What if the GPU decided when the monitor should refresh the image on screen?

This is the concept behind a variable refresh rate. The GPU will generate its frames as fast as it can and will notify the monitor in real time, rather than letting the monitor call the GPU for a new frame as seen before. Indeed, remember that the fixed refresh rate of 60 Hz comes from the CRT era. LCD or OLED panels shouldn’t be forced to follow those rules.

Thanks to that, if the GPU is rendering at 42 fps, the monitor will refresh at 42 Hz. Even better, if the rendering varies from 30 to 60 fps, the monitor will also adapt from 30 to 60 Hz in real time. This eliminates the issues seen above: no stutter, no drop to 30 fps, the best input lag, and a better overall perception of smoothness. Going higher than 60 Hz will depend on the underlying hardware and technology. Some monitors can go as high as 144 Hz and even more.
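As a rough sketch of the difference, the two behaviors can be put side by side. The supported range passed to `vrrRefreshHz` (30 to 144 Hz here) describes a hypothetical panel, and simply clamping to that range is a simplification: what real hardware does outside its range is not covered. The fixed-refresh function assumes double buffering and a constant render time per frame.

```typescript
// Refresh rate used by a variable refresh rate display for a given GPU frame rate.
// minHz and maxHz describe the supported range of a hypothetical panel.
function vrrRefreshHz(gpuFps: number, minHz: number, maxHz: number): number {
  return Math.min(maxHz, Math.max(minHz, gpuFps));
}

// Fixed refresh rate with V-Sync on, for comparison: each frame stays on
// screen for a whole number of refresh cycles.
function fixedRefreshFps(gpuFps: number, refreshHz: number): number {
  return refreshHz / Math.ceil(refreshHz / gpuFps);
}

console.log(vrrRefreshHz(42, 30, 144)); // 42: the monitor follows the GPU
console.log(fixedRefreshFps(42, 60)); // 30: the same GPU on a fixed 60 Hz monitor
```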

On PC, this technology exists as either NVIDIA G-Sync or AMD FreeSync. On console, the Xbox One S and Xbox One X already support FreeSync. The next generation of consoles, PS5 and Xbox Series X, will also support VRR over HDMI 2.1 up to 120 Hz. Very recently, some Samsung QLED and LG OLED TVs have added some support for VRR.

We can at last free ourselves from the original Tesla decision to limit ourselves to fixed 60 Hz refresh rate!

Well, you should now better understand the difference between frame rate and refresh rate. You should also better understand why it’s better to target 30 fps if 60 fps can’t be rendered at a very stable rate, and why 60+ fps is much better to have when possible.

The next article will be dedicated to explaining what pixel and vertex shaders are: Understanding Shaders, the secret sauce of 3D engines.
